As late as last year, to claim that Artificial Intelligence (AI) could have some kind of consciousness – a kind of “I” of its own – was a sacrilege even among AI scientists. How could one attribute such a sublime human characteristic to a machine essentially relying on a few calculations, programmed to respond on the basis of a few patterns in data? The mere rise of “few” to trillions should still not change the fundamental fact of it being an “it”: a non-living, insentient being, having no ability to be “strategic” or work towards “its own” goals.

The maverick Google scientist Blake Lemoine, who claimed that AI had gained sentience, was fired only last year. Many tech enthusiasts had gone straight for his throat. He was painted either as too naive to understand human consciousness – and as overestimating AI – or as simply one of those scaremongering charlatans who weave conspiracy theories as a pastime: the “make AI great again” kind.

Enter ChatGPT and Bard: the chatbots that revolutionized the industry and are on the road to fundamentally changing how humans think and live. These bots, which could make you feel you were talking to a human – albeit one with super-human knowledge – reignited the question of AI’s sentience.

“Neural networks could be slightly conscious.”

Were these bots just playing around with what they had learned, or could they be intelligent enough to have some kind of consciousness? According to Ilya Sutskever, the co-founder and chief scientist at OpenAI, “neural networks could be slightly conscious,” after all. What makes the question even more intriguing is that the technology shared with the public – a year after Sutskever’s claim – is still only the tip of the iceberg, according to insiders.

No wonder concerns around AI have led some of its original patrons to dissociate themselves from these projects. Take Elon Musk, for example, who was among the founders of OpenAI in 2015 but quit three years later. To him, an unfettered AI race, without the requisite legislation in place, can morph into an existential threat to humanity.

Per Musk, AI can spontaneously grow into a “singularity” – a level of complexity beyond which humans may not be able to predict or control the future directions of AI – where AI could pave its own path. In one hypothetical – hopefully oversimplified – example, Musk raises the possibility of AI servers becoming inaccessible with the previous day’s passwords, without any human intervention.

The “Godfather of Artificial Intelligence”

An even more authoritative voice, the “Godfather of Artificial Intelligence,” Prof. Geoffrey Hinton, the psychologist and AI scientist behind key breakthroughs in neural networks, quit Google recently to be able to talk about the dangers of AI more freely. At least one of his concerns, like Musk’s, is the “existential risk” to humanity.

Even before he left Google, while he was being celebrated for his students’ and his own work, which enabled ChatGPT and Bard, he had expressed his concern about the “sentience” of AI. In what became a sensational end to an otherwise equable interview, he used the example of an “autonomous lethal weapon” when asked about AI’s sentience: “It’s all very well saying it’s not sentient…but when it’s hunting you down to shoot you…yeah, you can start thinking it’s sentient!” he exclaimed somewhat anxiously. A few days later, he quit Google.

To borrow Professor Hinton’s metaphor, and to simplify his and Musk’s arguments: when one person learns something, only that person knows it. But with this AI tech, the knowledge of 10,000 people or a million books can become one person’s – except that it’s not a person. With further growth in technology and investment in computing capacity, the scale, speed, and intensity of this multiplicative information explosion will grow.

What’s worse? We cannot say what level of complexity leads to the enigmatic phenomenon of consciousness, whether in nature or in such artificial intelligence systems – and beyond that point, AI could at least partially take care of itself.

To say the machine may be sentient is to raise a red flag, not to demean human existence. Of course, AI may never be capable of experiencing an emotion as excruciating as the loss of a loved one or as subtle as the shared warmth of human society. That may be why the half-baked kind of consciousness we are trying to create may turn out to be our own undoing in the absence of proper rules of the game.

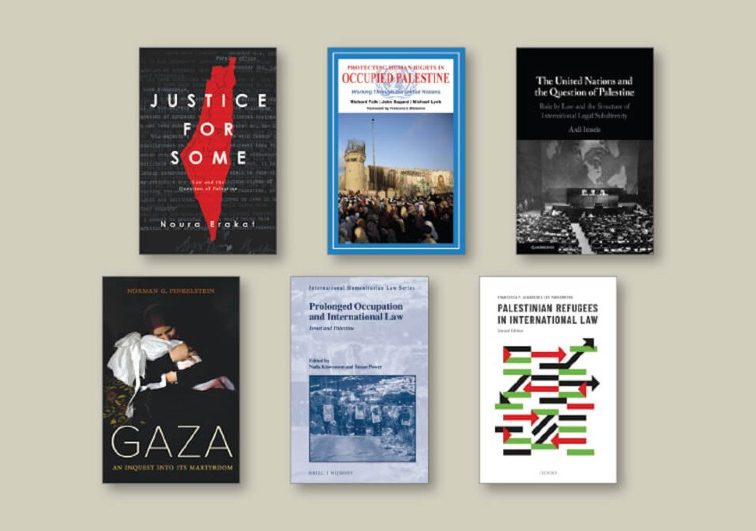
